Real-time Automated Cinematography using Aerial Vehicles

People

Funding

This project is funded by the Netherlands Organisation for Scientific Research (NWO) Applied Sciences under project Veni 15916.

About the Project

Cinematography and film-making are applications where robotics is receiving increasing attention. Movie directors often rely on expensive equipment such as helicopters and high-end cameras to obtain certain critical shots. Aerial vehicles can be a suitable alternative, helping to obtain the desired visuals while respecting specific constraints set by the cameraman. This project focuses on this application and aims to develop a system of automated aerial vehicles capable of planning collision-free paths in real time. The resulting methods have been applied to a fleet of aerial vehicles across a number of complex scenes to demonstrate the effectiveness of real-time automated drone cinematography.

The first contribution of this project was a model predictive control formulation for a single drone that plans trajectories subject to cinematographic constraints such as the visibility of the actors and their screen positioning. The next step was to extend it to multiple drones and ensure collision-free trajectories. This is done with an algorithm that takes high-level plans and image-based framing objectives as input from the user, both of which can be updated in real time, and uses them to generate collision-free paths for each drone. Because the algorithm runs in real time, it can incorporate feedback and capture visuals in cluttered environments with moving actors.
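To make the idea of image-based framing objectives more concrete, the following is a minimal sketch of the kind of per-step cost such a planner might minimise. It is not the formulation from the publications: the level pinhole camera model, the function names (`project_to_screen`, `framing_cost`, `separation_cost`, `stage_cost`), the weights, and the penalty values are all assumptions chosen for illustration.

```python
import math

def project_to_screen(cam_pos, cam_yaw, point, focal=1.0):
    """Project a world point into a level pinhole camera yawed about the z-axis.

    Returns normalised image coordinates (u, v), with u positive to the right
    and v positive downwards, or None if the point is not in front of the camera.
    """
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    dz = point[2] - cam_pos[2]
    c, s = math.cos(cam_yaw), math.sin(cam_yaw)
    forward = c * dx + s * dy      # distance along the optical axis
    left = -s * dx + c * dy        # offset to the camera's left
    if forward <= 1e-6:
        return None
    return (-focal * left / forward, -focal * dz / forward)

def framing_cost(cam_pos, cam_yaw, actor_pos, desired_uv, behind_penalty=1e3):
    """Squared distance between the actor's projection and its desired screen position."""
    uv = project_to_screen(cam_pos, cam_yaw, actor_pos)
    if uv is None:
        return behind_penalty      # actor not visible: large fixed penalty (assumed)
    return (uv[0] - desired_uv[0]) ** 2 + (uv[1] - desired_uv[1]) ** 2

def separation_cost(pos_a, pos_b, min_dist=1.0):
    """Soft penalty that grows when two drones come closer than min_dist."""
    return max(0.0, min_dist - math.dist(pos_a, pos_b)) ** 2

def stage_cost(drones, actor_pos, desired_uvs, w_sep=10.0):
    """Sum framing costs over all drones plus pairwise separation penalties.

    drones is a list of (position, yaw) tuples, one per drone.
    """
    cost = 0.0
    for (pos, yaw), uv in zip(drones, desired_uvs):
        cost += framing_cost(pos, yaw, actor_pos, uv)
    for i in range(len(drones)):
        for j in range(i + 1, len(drones)):
            cost += w_sep * separation_cost(drones[i][0], drones[j][0])
    return cost

# Two drones facing an actor from opposite sides, both wanting it centred.
drones = [((0.0, 0.0, 0.0), 0.0), ((10.0, 0.0, 0.0), math.pi)]
total = stage_cost(drones, (5.0, 0.0, 0.0), [(0.0, 0.0), (0.0, 0.0)])
```

In a model predictive controller, a cost of this shape would be summed over a short horizon of predicted drone states and re-optimised at every control step, which is what allows the user's framing objectives to change while the drones are flying.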

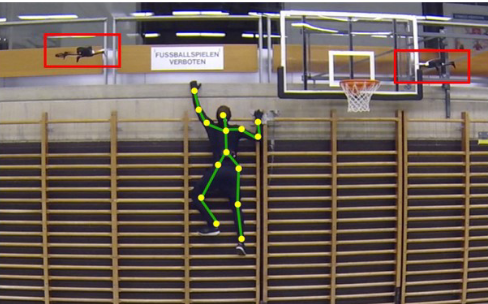

Another aspect of this project focused on human pose estimation using a swarm of aerial vehicles, maximising the visibility of the human from different viewpoints during long motion sequences and in scenarios such as jogging or jumping. The proposed method collects images from all drones and detects and labels 2D joint positions. It then estimates the joint positions of the human skeleton and optimizes the relative positions and orientations of the multi-robot swarm. Finally, it computes control inputs for the drones via model-predictive control (MPC) to keep the human visible during motion.
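As an illustration of how 2D detections from several drones can be combined into a single joint estimate, here is a minimal sketch of least-squares ray triangulation. It is a generic textbook technique, not the estimator used in the project, and it assumes that each 2D detection has already been back-projected into a viewing ray with a known camera origin and direction in a common world frame. The function names `solve3` and `triangulate_joint` are hypothetical.

```python
import math

def solve3(A, b):
    """Solve a 3x3 linear system A x = b with Cramer's rule."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    d = det(A)
    if abs(d) < 1e-12:
        raise ValueError("rays are (nearly) parallel; joint position is not observable")
    x = []
    for k in range(3):
        # Replace column k of A with b.
        Ak = [[b[i] if j == k else A[i][j] for j in range(3)] for i in range(3)]
        x.append(det(Ak) / d)
    return x

def triangulate_joint(origins, directions):
    """Return the 3D point minimising the summed squared distance to all rays.

    Each ray is given by a camera origin and a direction of non-zero length.
    The minimiser x satisfies  sum_i (I - d_i d_i^T) x = sum_i (I - d_i d_i^T) o_i.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for o, d in zip(origins, directions):
        norm = math.sqrt(sum(c * c for c in d))
        d = [c / norm for c in d]
        for i in range(3):
            for j in range(3):
                m = (1.0 if i == j else 0.0) - d[i] * d[j]
                A[i][j] += m
                b[i] += m * o[j]
    return solve3(A, b)

# Three drones observing the same joint from different positions.
joint = (1.0, 2.0, 1.5)
origins = [(0.0, 0.0, 3.0), (5.0, 0.0, 3.0), (0.0, 6.0, 2.0)]
directions = [tuple(j - o for j, o in zip(joint, origin)) for origin in origins]
estimate = triangulate_joint(origins, directions)
```

The error raised for nearly parallel rays hints at why the drones' relative positions matter: viewpoints that are well spread around the person make the joint positions better conditioned to estimate.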

Project Demonstrations

Funding & Partners

This project was carried out in collaboration with ETH Zurich.

Related Publications

Flycon: Real-time Environment-independent Multi-view Human Pose Estimation with Aerial Vehicles

In ACM Transactions on Graphics (SIGGRAPH Asia), 2018.

Real-time Planning for Automated Multi-View Drone Cinematography

In ACM Transactions on Graphics (SIGGRAPH), vol. 36, no. 4, Article 132, 2017.

Real-time Motion Planning for Aerial Videography with Dynamic Obstacle Avoidance and Viewpoint Optimization

In IEEE Robotics and Automation Letters, vol. 2, no. 3, pp. 1696-1703, 2017.