Game-Theoretic Motion Planning for Multi-Agent Interaction

People

Funding

This project is funded in part by the National Police (Politie) of the Netherlands.

More LinksAbout the Project

In order for robots to be helpful companions in our everyday lives, they must be able to operate outside of cages, alongside humans and other robots. This achievement would allow to deploy robots in a much greater set of applications than the ones that we seeing today with opportunities ranging from from autonomous driving in urban dense urban traffic to automation of hospital logistics. A key requirement to achieve this goal is the problem of safe and efficient motion planning in interaction with other decision-making entities. This requirement poses a particular challenge when the robot cannot directly communicate with other agents. In such a scenario, it is of utmost importance that autonomous agents understand the effect of their actions on the decisions of others.

A principled mathematical framework for modeling such interaction of multiple agents over time is provided by the field of dynamic game theory. In this framework, agents are modeled as rational decision-making entities with potentially differing objective whose actions affect the evolution of the state of a shared environment. The flexibility of this framework allows to capture a wide range of aspects and challenges common to real-world interactions, including noncooperative behavior, bounded rationality, and dynamic evolution of potentially imperfect and incomplete information available to each player. When solved to a generalized equilibrium concept, these problems can not only account for interdependence of preference but also for interdependence of feasible actions, e.g., collision avoidance constraints. This vast modeling capacity makes dynamic game theory an attractive framework for autonomous planning in the presence of other agents.

The goal of this project is to push the state-of-the-art in motion planning for multi-agent interaction by combining game-theoretic approaches with learning-based techniques. Through this combination, we aim to develop algorithms that admit autonomous strategic decision-making in realis- tic real-world scenarios with limited computational resources.

Project Demonstrations

Funding & Partners

Related Publications

Auto-Encoding Bayesian Inverse Games

In 16th Workshop on the Algorithmic Foundations of Robotics (WAFR),

2024.

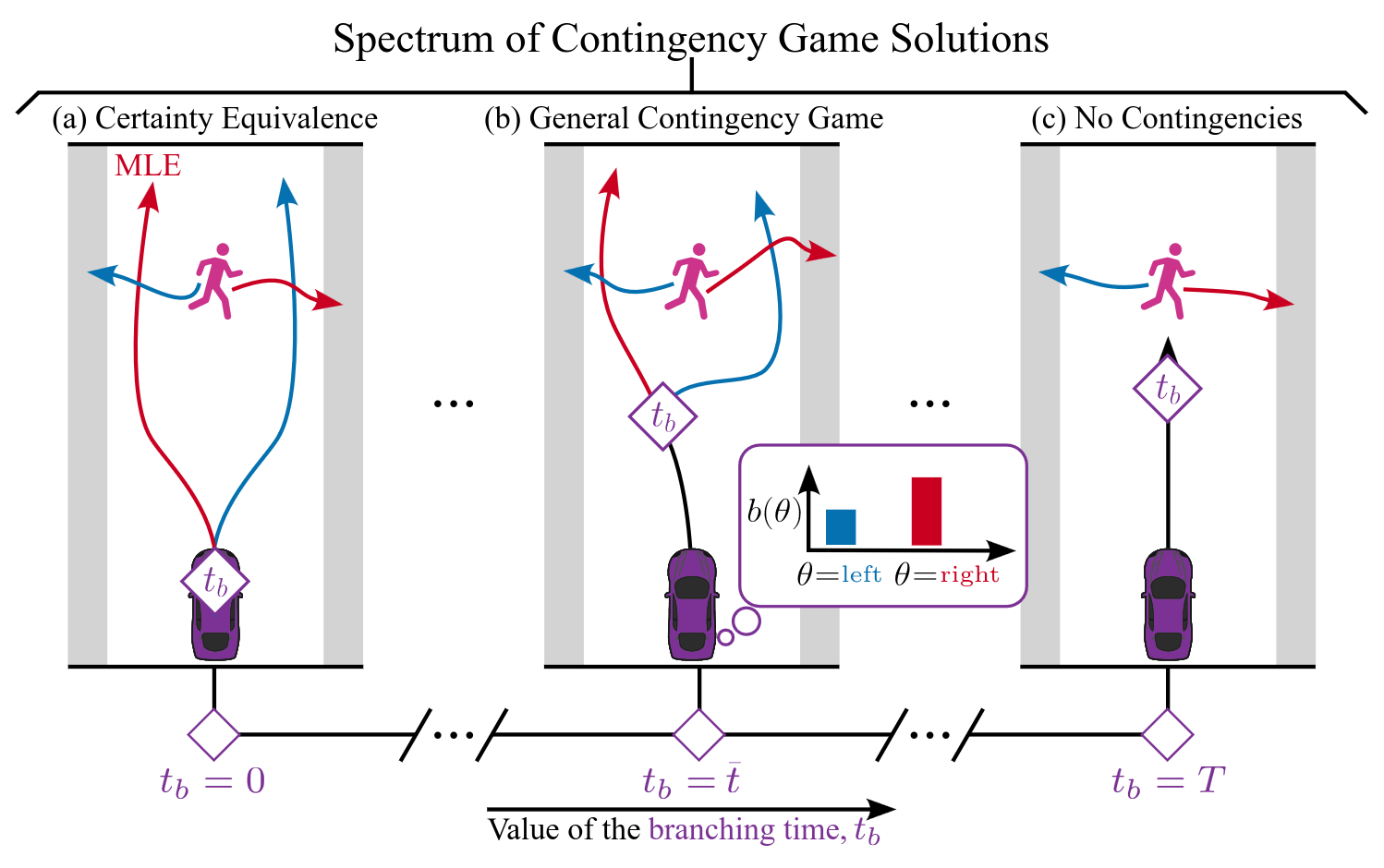

Contingency Games for Multi-Agent Interaction

In Robotics and Automation Letters (RA-L),

2024.

Online and offline learning of player objectives from partial observations in dynamic games

In The International Journal of Robotics Research (IJRR),

2023.

Learning to Play Trajectory Games Against Opponents with Unknown Objectives

In IEEE Robotics and Automation Letters (RA-L),

2023.

Learning Mixed Strategies in Trajectory Games

In , Proc. of Robotics: Science and Systems (RSS),

2022.